WWDC25 and Google I/O 2025 are now in our rearview mirror, but the hot takes are still swirling. To cut through the noise, we wanted to step back, reflect on the recent announcements from Apple and Google, and offer actionable insights for product owners and decision-makers planning for the year ahead.

The future of mobile apps is becoming clearer. The mobile ecosystem is undergoing a pivotal transformation, driven by parallel advances on both platforms. With new design systems, more capable on-device AI, deeper system integration, and expanded multi-platform support, the opportunity to create innovative, user-centric experiences has never been greater. These changes also expose approaches that are quickly becoming outdated. This article explores the themes Apple and Google shared this year and explains why adopting these new features is essential for staying relevant, reducing costs, and delivering exceptional user experiences.

Let’s dive into the four key themes to prioritize for discussion at your next team meeting.

Apple has always been design-forward, but its last major design overhaul was more than a decade ago, with iOS 7. In this year’s WWDC keynote, Craig Federighi, Apple’s SVP of Software Engineering, said iOS 26 (coming this fall) will set the direction of Apple’s design language for years to come. Google is joining Apple in taking a clear, distinct stance on Android’s design language, one that prioritizes usability and accessibility.

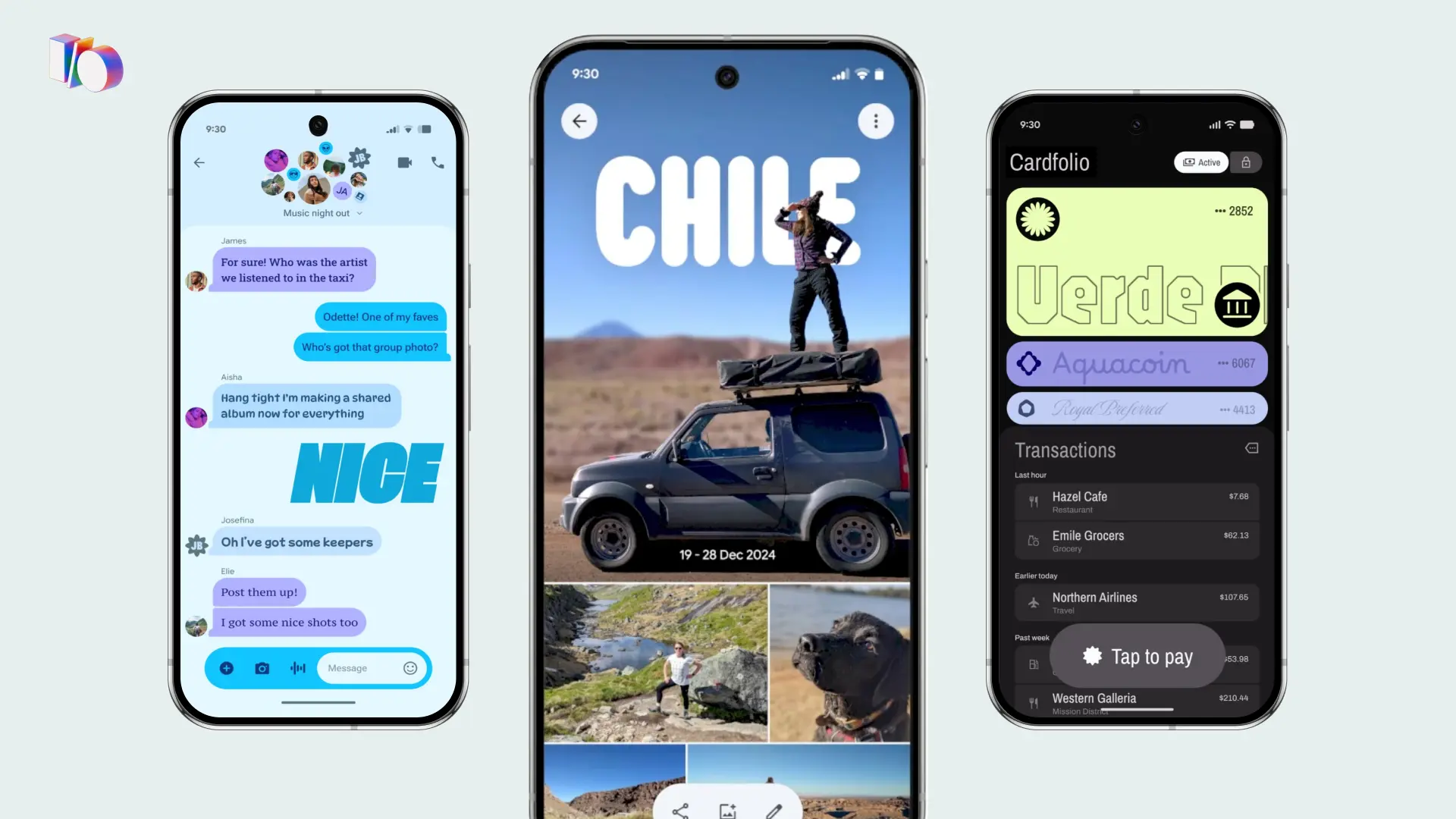

Apple introduced the Liquid Glass design language, which “gives you a more consistent experience across your apps and devices, making everything you do feel fluid.” We've used the beta and can attest to that seamless feel and the delight it provides users. Lessons learned from visionOS will carry over to all devices, and you’ll see built-in accessibility improvements, new tab bar positioning with integrated search, and platform-specific visual elements.

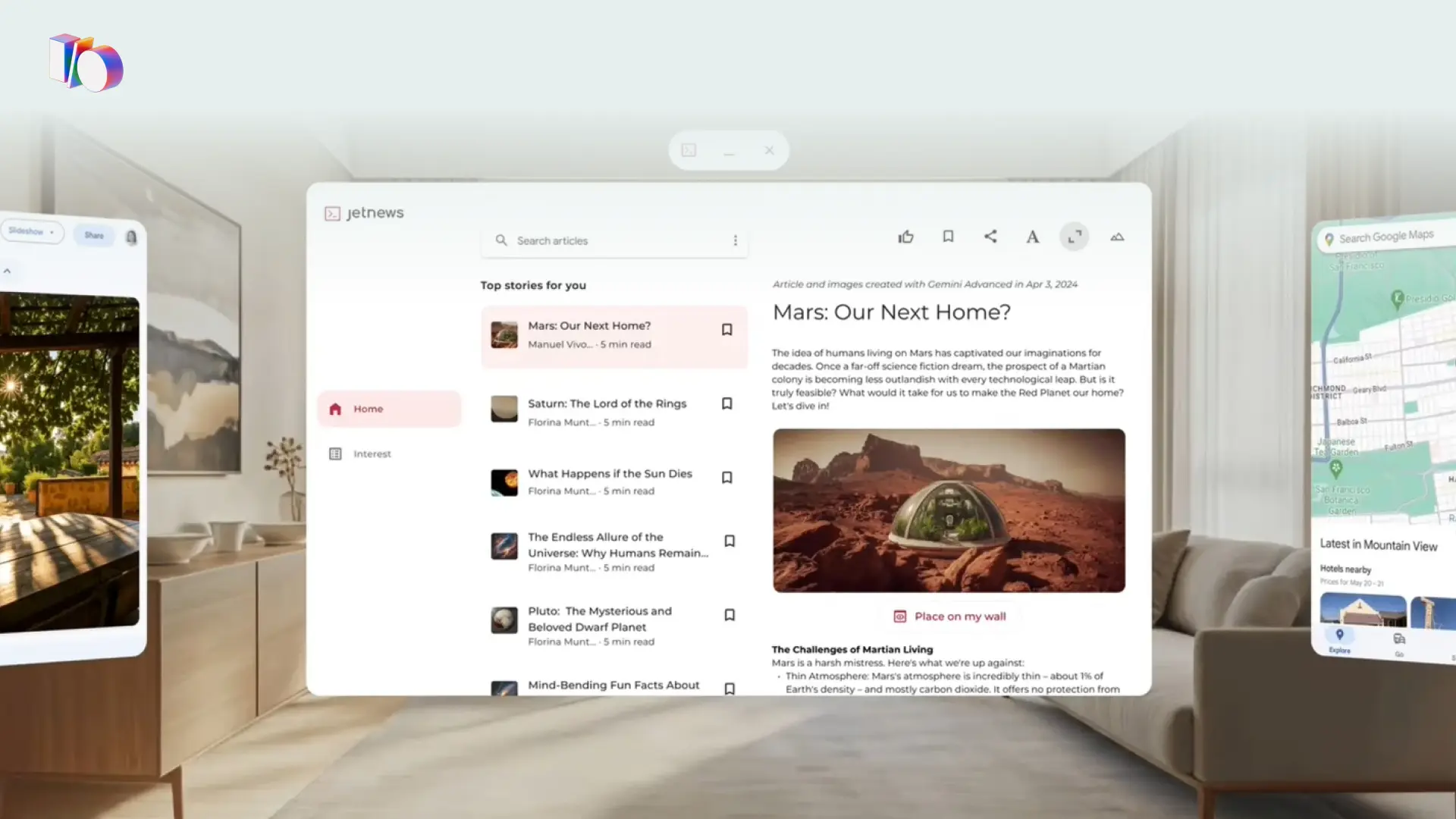

Google launched Material 3 Expressive, which “provides new ways to make your product more engaging, easy to use, and desirable.” Sound familiar? This big shift in Google’s design language emphasizes bold colors, bigger buttons, and dynamic elements: research-backed changes that promise faster navigation for users of all ages. Poring over the imagery Google provided, it’s easy to see how it is infusing fun into even the smallest interactions.

Adopting platform-specific design systems enhances app usability, reduces development costs, and makes it easier to meet modern accessibility standards. We recommend leaning into each platform's conventions rather than forcing cross-platform visual consistency. “But what about differentiating my brand?” you might ask. Be careful not to conflate differentiation with customization; heavy customization can create friction with the platform experience your users have already embraced. Custom components may start to feel clunky, dated, or simply out of place faster than ever. Aligning your app with each platform's design helps future-proof it and lets your teams deliver a better user experience while reducing maintenance overhead. By leveraging Apple’s and Google’s toolsets, you build in cost savings.

Instead of fixating on customization, uphold brand differentiation through relevant, high-quality content and creative use of new tools. By aligning with platform-specific features (spoiler: that’s theme #3 below), you free up resources to innovate in areas like unique functionality, user engagement, and personalized experiences. This approach ensures apps not only look cutting-edge but also deliver value in the ways that matter most to users.

Apple and Google are introducing and expanding on-device AI capabilities that developers can access directly, offering local LLM processing, stronger privacy options, and third-party support to enable smarter, faster, and more personalized app experiences.

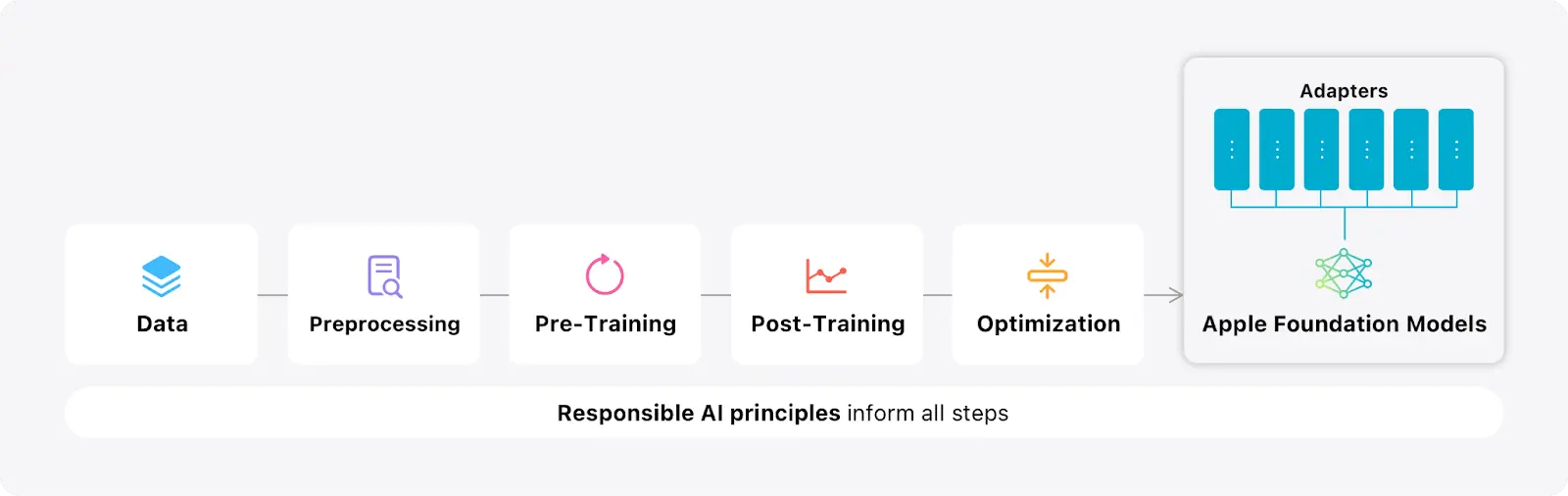

Apple says it will provide on-device LLM access via its Foundation Models framework, enabling apps to use AI capabilities such as natural language processing, summarization, and content generation. This approach lets developers integrate advanced AI features while prioritizing user privacy and reducing latency.

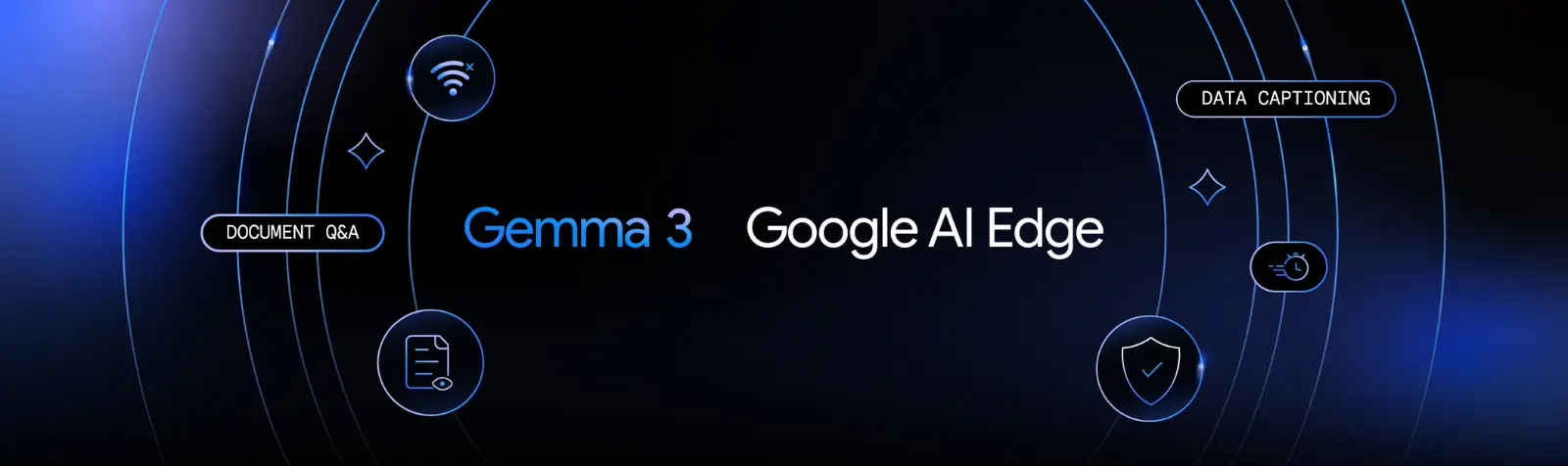

Google is expanding on-device AI capabilities for Android developers with Gemma 3, accessible through Google AI Edge. This update includes Gemma 3 1B, a compact yet capable model designed specifically for mobile and web applications. It allows developers to integrate small language models (SLMs) directly into their apps, balancing quick download times, rapid execution, and broad device compatibility.

While both tech giants made ambitious AI promises in 2024, this year marks a notable shift from rhetoric to reality. Apple's cautious rollout of its 2024 announcements and Google's broad but (some say) shallow deployment of Gemini left developers wanting more. This year's announcements, however, show meaningful progress in on-device AI capabilities that developers can actually implement. Running models on the device reduces latency, lowers server costs, and keeps user data local under Apple’s and Google’s stringent privacy standards, enabling real-time, personalized features.

And personalization is about to get exciting! One of the most compelling advancements is the ability of on-device LLMs to interface directly with your app's functionality through tool calling. This enables sophisticated natural language interactions that trigger specific actions within your app. The potential use cases should inspire product owners and developers alike.

Imagine a marketplace e-commerce app with voice control. The user says, “Look up my first delivery for the day and mark it as In Progress.” If your app has exposed tools like “lookUpOrder” and “updateOrderStatus” to the LLM, the system could handle both steps, saving the user time.
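To make the tool-calling idea concrete, here is a minimal, framework-agnostic sketch in Python. It assumes the model hands back each tool call as a tool name plus a dictionary of arguments; the model itself is simulated with two hardcoded calls, and the order data, the `position` argument, and the Python function names are all hypothetical. Apple's Foundation Models framework and Google AI Edge each have their own way of declaring tools, which this sketch does not reproduce. It only shows the app-side half of the pattern: a registry of exposed functions and a dispatcher that refuses anything the app did not expose.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Order:
    """Hypothetical delivery record; a real app would query its own backend."""
    order_id: str
    scheduled_hour: int
    status: str


ORDERS = [
    Order("A-102", scheduled_hour=14, status="Scheduled"),
    Order("A-101", scheduled_hour=9, status="Scheduled"),
]


def look_up_order(position: int) -> str:
    """Hypothetical tool: return the ID of the Nth delivery of the day (1-based)."""
    by_time = sorted(ORDERS, key=lambda order: order.scheduled_hour)
    return by_time[position - 1].order_id


def update_order_status(order_id: str, status: str) -> str:
    """Hypothetical tool: change an order's status and confirm the change."""
    for order in ORDERS:
        if order.order_id == order_id:
            order.status = status
            return f"{order_id} is now {status}"
    raise KeyError(order_id)


# The app decides exactly which functions the model may call, and under which names.
TOOLS: Dict[str, Callable[..., Any]] = {
    "lookUpOrder": look_up_order,
    "updateOrderStatus": update_order_status,
}


def run_tool_call(name: str, arguments: Dict[str, Any]) -> Any:
    """Dispatch one structured tool call emitted by the model."""
    if name not in TOOLS:
        raise ValueError(f"model requested an unexposed tool: {name}")
    return TOOLS[name](**arguments)


# Simulated model output for "Look up my first delivery for the day and
# mark it as In Progress": two calls, the second using the first's result.
first_order = run_tool_call("lookUpOrder", {"position": 1})
print(run_tool_call("updateOrderStatus", {"order_id": first_order, "status": "In Progress"}))
# prints: A-101 is now In Progress
```

Keeping the registry explicit means the model can only ever trigger actions you chose to expose, which is the main safety lever on the app side.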

Or, in a smart home app, a user could say, “We're about to watch The Little Mermaid. Set the scene in the living room.” With access to tools like “setLightHue,” “setLightBrightness,” and “setLightPowerState,” an on-device LLM could interpret the natural language request, turn on the living room lights, dim them, and shift them to deep-sea blues and reds to set the mood for movie-watching.
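The smart home request shows the other half of the pattern: describing tools to the model and checking what it sends back before acting on it. The sketch below, again in Python and again hypothetical (the light IDs, argument names, value ranges, and the spec format are assumptions, not any platform's API), validates every call in a model-proposed plan and applies the plan only if all steps are valid, so a single bad value cannot leave the room half-configured.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical device state for two living room lights.
LIGHTS: Dict[str, Dict[str, Any]] = {
    "living-room-lamp": {"on": False, "percent": 100, "hue": 40},
    "living-room-strip": {"on": False, "percent": 100, "hue": 40},
}

# Hypothetical tool specs: what the app tells the model each tool does and accepts.
TOOL_SPECS: Dict[str, Dict[str, Any]] = {
    "setLightPowerState": {
        "description": "Turn a light on or off.",
        "params": {"light": str, "on": bool},
    },
    "setLightBrightness": {
        "description": "Set a light's brightness from 0 to 100 percent.",
        "params": {"light": str, "percent": int},
        "ranges": {"percent": (0, 100)},
    },
    "setLightHue": {
        "description": "Set a light's color as a hue angle from 0 to 359 degrees.",
        "params": {"light": str, "hue": int},
        "ranges": {"hue": (0, 359)},
    },
}

ToolCall = Tuple[str, Dict[str, Any]]


def problems_with(name: str, args: Dict[str, Any]) -> List[str]:
    """Return the reasons a model-proposed call is invalid (empty list if valid)."""
    spec = TOOL_SPECS.get(name)
    if spec is None:
        return [f"unknown tool {name!r}"]
    if set(args) != set(spec["params"]):
        return [f"{name}: expected arguments {sorted(spec['params'])}"]
    issues = []
    for key, expected in spec["params"].items():
        # Exact type check, so True is not accepted where an int is expected.
        if type(args[key]) is not expected:
            issues.append(f"{name}: {key} should be {expected.__name__}")
    if issues:
        return issues
    if args["light"] not in LIGHTS:
        issues.append(f"{name}: no light named {args['light']!r}")
    for key, (low, high) in spec.get("ranges", {}).items():
        if not low <= args[key] <= high:
            issues.append(f"{name}: {key}={args[key]} is outside {low}-{high}")
    return issues


def apply_plan(plan: List[ToolCall]) -> List[str]:
    """Validate every call first; apply the plan only if all calls are valid."""
    issues = [issue for name, args in plan for issue in problems_with(name, args)]
    if issues:
        return issues
    for _name, args in plan:
        # Each tool sets the state field named by its non-"light" argument.
        for key, value in args.items():
            if key != "light":
                LIGHTS[args["light"]][key] = value
    return []


# Simulated model plan for "Set the scene in the living room" (movie night).
movie_scene: List[ToolCall] = [
    ("setLightPowerState", {"light": "living-room-lamp", "on": True}),
    ("setLightPowerState", {"light": "living-room-strip", "on": True}),
    ("setLightBrightness", {"light": "living-room-lamp", "percent": 30}),
    ("setLightBrightness", {"light": "living-room-strip", "percent": 30}),
    ("setLightHue", {"light": "living-room-lamp", "hue": 210}),  # deep-sea blue
    ("setLightHue", {"light": "living-room-strip", "hue": 0}),   # red
]
print(apply_plan(movie_scene))  # prints: []
```

Validating the whole plan before applying any step trades a little flexibility for predictability; an app could instead apply steps one at a time and report partial success back to the model.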

By exposing your app's core functions as tools that the LLM can access, you can transform complex multi-step processes into simple, conversational interactions, creating more intuitive, efficient user experiences. While this technology is still evolving, early experimentation will position your app to meet rising user expectations.

On-device LLMs will also significantly streamline the development process in two key ways:

We're still at the ground floor of this technology, but that’s all the more reason to experiment and stay ahead of the pack.

Both Apple and Google are enhancing system integration features, enabling apps to deeply embed into platform ecosystems through features like live updates, widgets, and intents.

Apple enhanced its system integration capabilities with Visual Intelligence, enabling users to interact with and query on-screen content naturally. They also enhanced App Intents with App Intent snippets, a feature which allows users to initiate app workflows via voice commands and complete them through overlay interfaces — all without leaving their current context. These updates, combined with new convenience methods for exposing app data to the system, make it easier for developers to create seamless, contextual experiences.

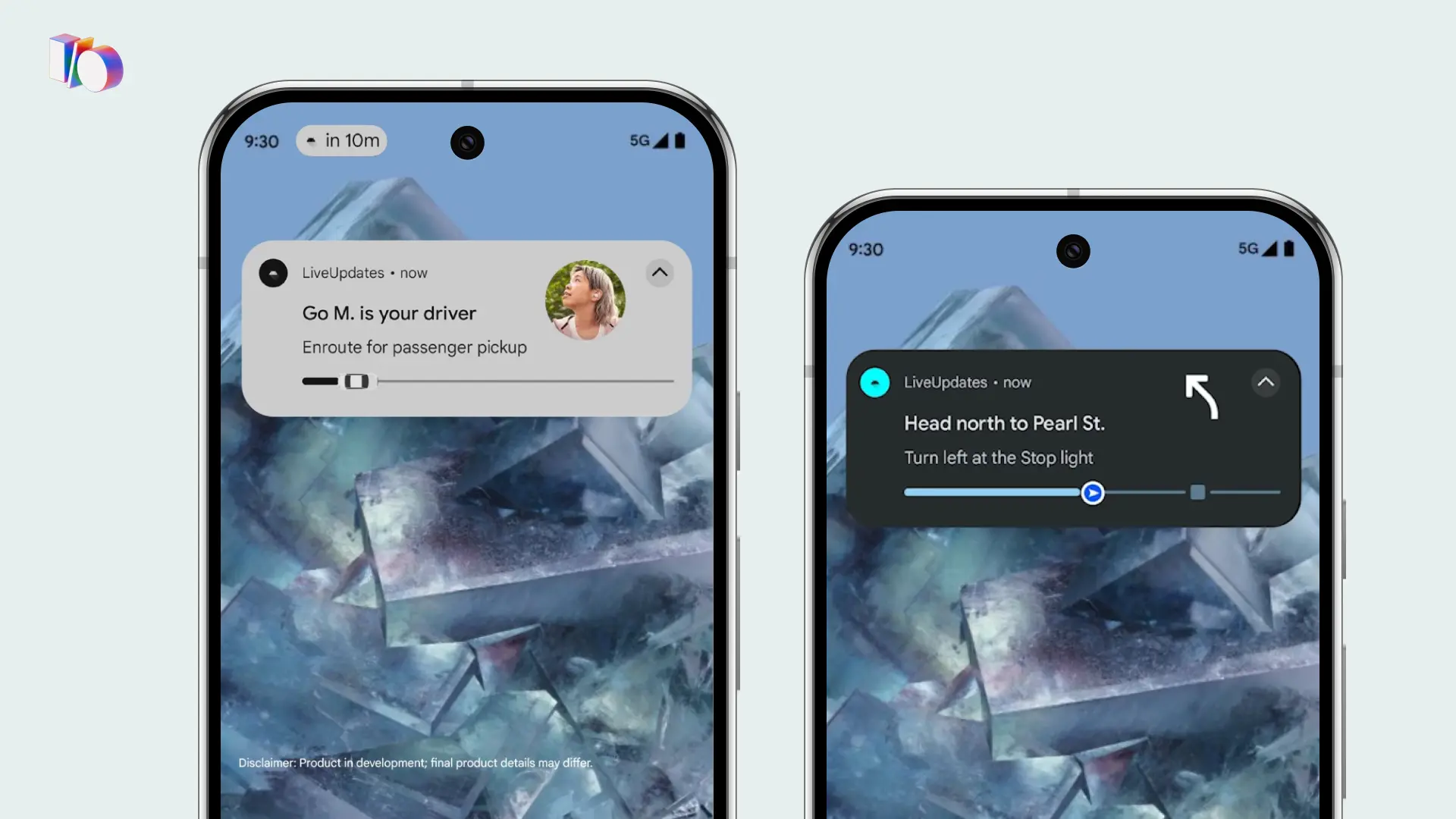

Google launched Live Updates, a new class of prominent, real-time progress notifications for Android that brings the platform closer to parity with iOS Live Activities. This release was accompanied by expanded support for widgets, Intents, and deeper system-level integrations, enabling apps to surface timely information and functionality throughout the Android ecosystem.

System integration capabilities are evolving rapidly, with on-device LLMs poised to significantly enhance user experiences in the near future. While the full potential of intuitive, intent-driven interactions is still developing, both Google and Apple are making strides in advancing this concept of "intents," which prioritizes user desires over traditional app navigation.

Currently, apps can “advertise” their capabilities to the system — ordering food, booking rides, managing communications, etc. — enabling users to accomplish tasks through shortcuts and basic natural language commands. For instance, users can set up shortcuts to order their favorite coffee or check flight statuses with minimal interaction.
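As a rough mental model of capability advertising, the toy Python sketch below has apps register named capabilities with a few keywords, and a stand-in "system" routes a command to the capability with the best keyword match. This is a deliberate simplification: real platforms use structured declarations (App Intents on iOS, for example) and far richer language understanding, and every app name, keyword, and handler here is hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class Capability:
    """A task an app advertises to the system (hypothetical, simplified)."""
    app: str
    name: str
    keywords: FrozenSet[str]
    handler: Callable[[str], str]


REGISTRY: List[Capability] = []


def advertise(app: str, name: str, keywords: Iterable[str],
              handler: Callable[[str], str]) -> None:
    """Apps call this to declare what they can do; the system keeps the registry."""
    REGISTRY.append(Capability(app, name, frozenset(keywords), handler))


def route(command: str) -> Optional[str]:
    """Send a command to the capability sharing the most keywords, or None if none match."""
    words = set(re.findall(r"[a-z']+", command.lower()))
    best = max(REGISTRY, key=lambda cap: len(cap.keywords & words), default=None)
    if best is None or not best.keywords & words:
        return None
    return best.handler(command)


# Hypothetical apps advertising capabilities, with stub handlers.
advertise("CoffeeApp", "orderFavoriteDrink", {"order", "coffee", "usual"},
          lambda _cmd: "Ordering your usual latte")
advertise("FlightApp", "checkFlightStatus", {"flight", "status", "gate"},
          lambda _cmd: "Your flight is on time")

print(route("Order my usual coffee"))     # prints: Ordering your usual latte
print(route("What's my flight status?"))  # prints: Your flight is on time
print(route("Play some jazz"))            # prints: None
```

Keyword overlap is only a placeholder for matching. The point is the division of responsibility: apps declare what they can do, and the system decides which capability a request maps to.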

Looking ahead to 2026, Apple plans to roll out more advanced Apple Intelligence-powered app intents. These enhancements promise to deliver more sophisticated, context-aware interactions. In the future, users might be able to say, "Order me a caramel macchiato from Starbucks, and add what Zach wanted in his last text," with the system intelligently coordinating between relevant apps to execute the request seamlessly.

To be ready, we recommend a two-pronged approach:

Additionally, on the topic of brand differentiation, prioritize surfacing the content that matters most to users in these integrations to maximize impact. In the evolving mobile landscape, you may have fewer opportunities to show users messaging within your app, but your brand will be associated with a more efficient, satisfying, and personalized experience than competitors who don’t adapt to this shift can offer.

Apple and Google are expanding support for multi-device and cross-platform experiences, enabling apps to seamlessly function across watches, TVs, XR devices, desktops, tablets, and more.

Apple introduced desktop-like functionality for the iPad with a more advanced windowing system, as well as Vision Pro support that lets SwiftUI apps run on XR devices with little extra effort.

Google enhanced Android's external display support and extended Compose compatibility to XR devices, making multi-device app experiences more accessible.

The trend of meeting users where they are continues with this shift toward multimodal experiences. Today's users seamlessly transition between devices, expecting consistent, device-optimized experiences across their entire digital ecosystem. Apple and Google's commitment to cross-device functionality signals a clear direction for the future of digital experiences. They're laying the groundwork for undisclosed technologies, making early adoption crucial for future-proofing your digital products.

Platform-native tools like SwiftUI and Jetpack Compose have dramatically reduced the complexity of building these cross-device experiences, so it's easier than ever to deliver truly unified applications. We recommend exploring multi-platform possibilities now, even if your app's primary use case doesn't immediately translate to every device. Start by developing minimum viable experiences for various platforms. This approach will yield valuable insights into how your core functionality can adapt across different interfaces and user contexts. A proactive stance ensures you'll be ready to capitalize on new opportunities in the evolving digital landscape.

We're witnessing a renaissance in mobile technology that extends far beyond traditional smartphones. All devices are becoming more intelligent, more contextually aware, and more deeply integrated into our daily lives. With new design systems like Google's Material 3 Expressive and Apple's Liquid Glass bringing more personality and sophistication to user interfaces, we're moving beyond the era of flat design into more engaging, dynamic experiences.

This evolution is about creating more intuitive, accessible, and delightful user experiences across all platforms … all while reducing development costs. By embracing these new capabilities and design paradigms now, you're not just updating your app; you're positioning your digital product for the next wave of innovation. The future of mobile is expansive, intelligent, and everywhere.
